It is very easy to find yourself with some siloed data; a specific question may have been asked by the business and, to answer it, a data export from one of your core applications is required to conduct some analysis.

Here is a possible scenario where this could quite easily happen:

Responding to the request, a business user exports some of the data, probably via an Excel workbook. The analysis is conducted, but some additional contextual data is required to complete it. So, the business user adds an additional column or two and, before you know it, they’ve essentially created a database*.

This database is then shared (probably via email) with a community of business users to populate the extra column(s). Once the (now multiple) spreadsheets have been updated by colleagues across the business, it is noted that some of the other values from the original export have been changed as business users noticed errors.

There is now a subset of data that may meet a specific and immediate need, but it no longer matches what is in the master repository. If some of the data in the Excel workbook has been updated and the core system has not, then you now have two versions of the "truth".

If business users continue to update the Excel document and not the core system, then the problem is exacerbated even further; the data in the workbook may now be the most current, and you are preventing other business users from accessing it.

You may now be thinking that this data should be appended back to the original database overwriting what is there. But how can you be certain that what now exists in the Excel workbook is the most current? Whilst the data has been isolated from its home system, you cannot be certain that other business users haven't accessed the master records and changed them. You now run the very real risk of overwriting business critical data.
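To make that risk concrete, here is a minimal sketch of a field-by-field check. It is an illustration only and rests on assumptions the scenario above may not satisfy: that an untouched copy of the original export was kept, that every record carries a stable identifier, and that all three copies hold the same records and fields. The function name and the sample data are hypothetical.

```python
def classify_changes(baseline, workbook, master):
    """Compare three snapshots of the same records, field by field.

    baseline: the untouched original export (assumed to have been kept)
    workbook: the edited spreadsheet
    master:   the core system as it stands today
    Each is a dict of {record_id: {field_name: value}}.
    """
    report = {"safe_to_apply": [], "conflict": []}
    for record_id, original in baseline.items():
        for field, old_value in original.items():
            sheet_value = workbook[record_id][field]
            master_value = master[record_id][field]
            if sheet_value == old_value or sheet_value == master_value:
                continue  # nothing to push back for this field
            if master_value == old_value:
                # Only the spreadsheet changed, so nothing newer is overwritten.
                report["safe_to_apply"].append((record_id, field, sheet_value))
            else:
                # Both copies changed, to different values: someone must decide.
                report["conflict"].append(
                    (record_id, field, sheet_value, master_value)
                )
    return report


# Hypothetical sample data: two records, one edited only in the spreadsheet,
# one edited in both the spreadsheet and the core system.
baseline = {
    1: {"email": "a@old.example", "phone": "0100"},
    2: {"email": "b@old.example", "phone": "0200"},
}
workbook = {
    1: {"email": "a@new.example", "phone": "0100"},
    2: {"email": "b@sheet.example", "phone": "0200"},
}
master = {
    1: {"email": "a@old.example", "phone": "0100"},
    2: {"email": "b@master.example", "phone": "0200"},
}

report = classify_changes(baseline, workbook, master)
print(report)
```

Without the untouched baseline there is no way to tell which side made a change, which is exactly the position the spreadsheet scenario leaves you in. Even with it, the conflicts still need a person to resolve them.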

This is not an uncommon situation. This piece of analysis was conducted to answer a specific business query, and now there are potentially two versions of the truth, with no idea which is the most current and no real plan for getting back to the status quo.

Another example could be the creation of a new app, or maybe a data collection form, which gathers some data that has real value to the business. But this method of gathering data is keeping it isolated from the core enterprise system(s).

Again, similar problems arise: this data could very well have value to other business users if they knew that it existed and that they could access it, and it invariably duplicates some information held elsewhere, such as personal details.

So, how do you get round these problems?

A custom enterprise application that is easy to configure is your solution. The trouble with an enterprise solution based on an off-the-shelf package is that it is built around an ideal or example process, but your business is unique. Purchasing a standard solution may not provide you with sufficient flexibility in the future when your processes change.

Gartner recognise this and in a recent article** they stated:

“Organizations have become burdened by full-scale applications, like today’s ERP and CRM offerings. Their size, complexity, inflexible user experience and internal entanglement create a monolithic effect: high costs, difficult-to-maintain customizations and slow innovation all act as barriers on the roadmap to digital transformation and the composable enterprise.”

This is something that we have previously addressed in our "COTS or custom" blog, which you can read here.

A unique business needs a unique solution. A custom application built by datb will be tailor-made to your business needs. And when you change your process or need to gather additional data, whether via an online form or keyed directly into the application, you can modify the model and add new database attributes.

As the data is temporal, this change won’t affect the current records, even if the new fields now form part of the minimum data set.

Moreover, with built-in analytics you don't need to take an off-line dataset.

The reasons to export data from the application now evaporate. You can still meet the new business challenge, but as the data never leaves the application, no data silo is created!

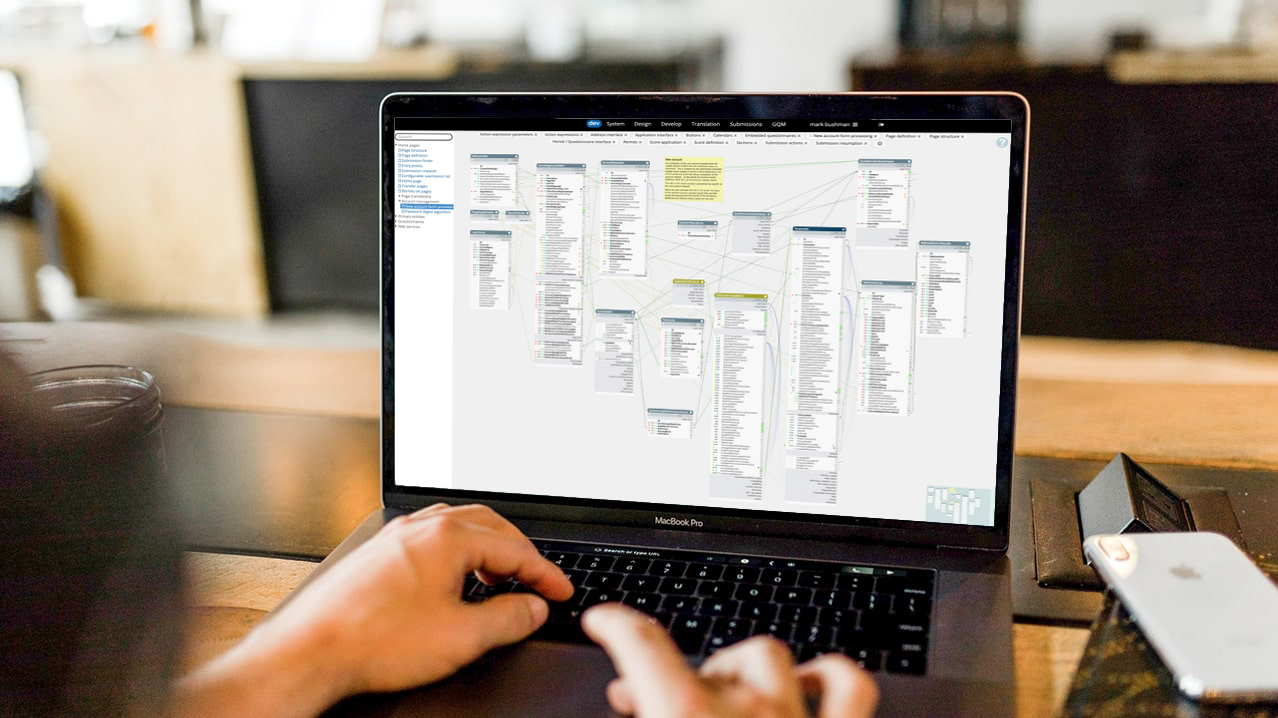

Applications built by datb are delivered via our proprietary development platform. This stores a representation of the data as a model, in the metadata. Because of this, modifications to the database take just a few minutes, meaning that your new data gathering and analysis project is easy to deliver without causing problems down the road.

Find out more about kinodb, the development and deployment platform, here.

*You may also be interested in the blog post "Microsoft Excel is NOT a database", which you can read here.

**Innovation Insight for Composable Modularity of Packaged Business Capabilities, March 2021.